Tutorial: Event Capture to Analytics

Are you a developer that is about to launch a dApp and you want your dApp interface to show up-to-date data?

With ZettaBlock, you can easily do that. As long as your contract is verified on a main -scan explorer, like EtherScan/PolygonScan, you will be able to find event data decoded with us.

But even if it is not a verified contract, our decoding algorithm looks for common patterns across networks, so if your contract was deployed on a different network before, we will attempt to decode it on every network we capture.

Here’s how you can find Compound Finance supply/withdrawal events for USDC, on Polygon.

How to create your own custom logic to display on your dApp

Step 1: SQL query to find supply/withdraw events

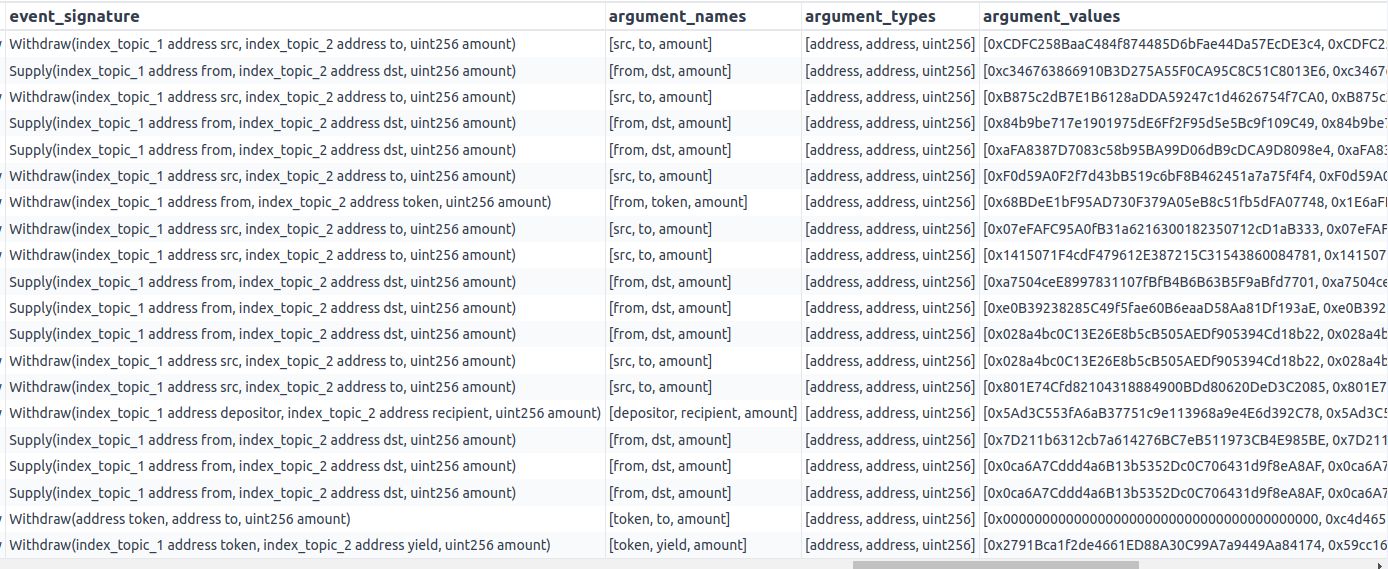

SELECT

*

FROM polygon_mainnet.logs as logs

WHERE 1=1

AND data_creation_date >= DATE('2023-03-07')

AND try(topics[1]) IN ('0xd1cf3d156d5f8f0d50f6c122ed609cec09d35c9b9fb3fff6ea0959134dae424e','0x9b1bfa7fa9ee420a16e124f794c35ac9f90472acc99140eb2f6447c714cad8eb')

AND logs.contract_address = '0xf25212e676d1f7f89cd72ffee66158f541246445' -- Compound USDC (cUSDCv3)Step 2: Putting it all together: event capture logic + price, to generate metrics

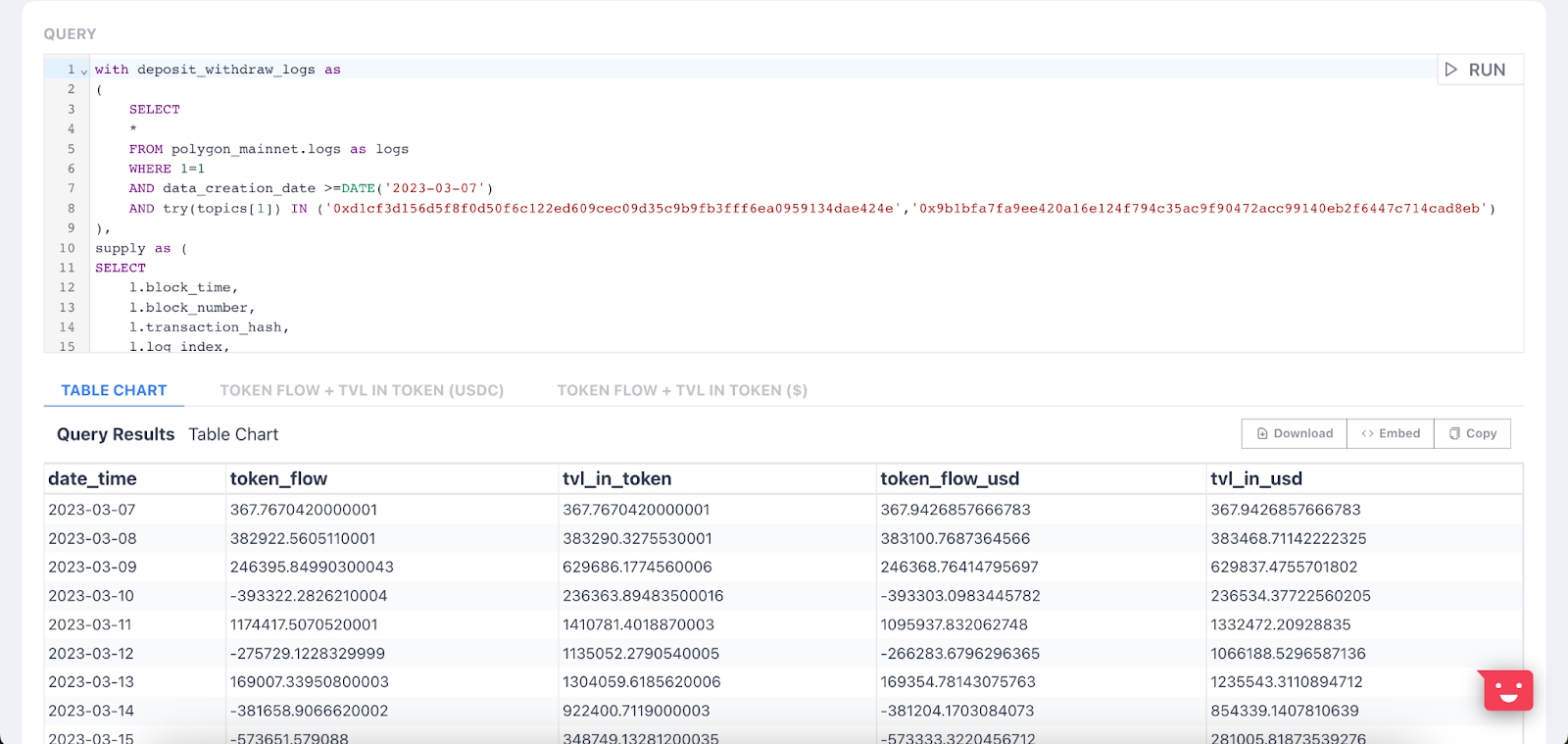

with deposit_withdraw_logs as

(

SELECT

*

FROM polygon_mainnet.logs as logs

WHERE 1=1

AND data_creation_date >=DATE('2023-03-07')

AND try(topics[1]) IN ('0xd1cf3d156d5f8f0d50f6c122ed609cec09d35c9b9fb3fff6ea0959134dae424e','0x9b1bfa7fa9ee420a16e124f794c35ac9f90472acc99140eb2f6447c714cad8eb')

AND logs.contract_address = '0xf25212e676d1f7f89cd72ffee66158f541246445' -- Compound USDC (cUSDCv3)

),

supply as (

SELECT

l.block_time,

l.block_number,

l.transaction_hash,

l.log_index,

lower(t.contract_address) as contract_address_tf,

lower(l.contract_address) as contract_address,

lower(l.argument_values[1]) as from_address_evt,

lower(l.argument_values[2]) as to_address_evt,

l.argument_values[3] as amount,

t.symbol,

t.decimals,

CAST(l.argument_values[3] AS DOUBLE)/CAST(POW(10,t.decimals) AS DOUBLE) as token_amount,

l.data_creation_date

FROM deposit_withdraw_logs as l

INNER JOIN polygon_mainnet.erc20_evt_transfer as t ON t.transaction_hash=l.transaction_hash AND l.argument_values[3]=t.value

AND lower(t.contract_address) = '0x2791bca1f2de4661ed88a30c99a7a9449aa84174'

WHERE t.data_creation_date >= DATE('2023-03-07')

AND try(l.topics[1]) = '0xd1cf3d156d5f8f0d50f6c122ed609cec09d35c9b9fb3fff6ea0959134dae424e'

AND lower(l.contract_address) = '0xf25212e676d1f7f89cd72ffee66158f541246445'

),

withdraw as (

SELECT

l.block_time,

l.block_number,

l.transaction_hash,

l.log_index,

lower(t.contract_address) as contract_address_tf,

lower(l.contract_address) as contract_address,

lower(l.argument_values[1]) as from_address_evt,

lower(l.argument_values[2]) as to_address_evt,

l.argument_values[3] as amount,

t.symbol,

t.decimals,

CAST(l.argument_values[3] AS DOUBLE)/CAST(POW(10,t.decimals) AS DOUBLE) as token_amount,

l.data_creation_date

FROM deposit_withdraw_logs as l

INNER JOIN polygon_mainnet.erc20_evt_transfer as t ON t.transaction_hash=l.transaction_hash AND l.argument_values[3]=t.value

AND lower(t.contract_address) = '0x2791bca1f2de4661ed88a30c99a7a9449aa84174'

WHERE t.data_creation_date >= DATE('2023-03-07')

AND try(l.topics[1]) = '0x9b1bfa7fa9ee420a16e124f794c35ac9f90472acc99140eb2f6447c714cad8eb'

AND lower(l.contract_address) = '0xf25212e676d1f7f89cd72ffee66158f541246445'

),

prices as

(

select

minute,

avg(price) as price,

lower(symbol) as symbol

FROM prices.usd

WHERE data_creation_date >= DATE('2023-03-07')

AND lower(symbol) IN ('usdc')

group by 1,3

),

with_price as

(

SELECT

w.*,

'withdraw' as action,

p.price

FROM withdraw as w

INNER JOIN prices as p

ON DATE_TRUNC('minute',w.block_time)=DATE_TRUNC('minute',p.minute)

UNION DISTINCT

SELECT

s.*,

'supply' as action,

p.price

FROM supply as s

INNER JOIN prices as p

ON DATE_TRUNC('minute',s.block_time)=DATE_TRUNC('minute',p.minute)

),

final_table as

(

SELECT

block_time,

block_number,

transaction_hash,

log_index,

contract_address_tf,

contract_address,

from_address_evt,

to_address_evt,

amount,

symbol,

decimals,

token_amount,

token_amount*price as amount_usd,

action,

data_creation_date

FROM with_price

)

-- Create Metric to capture inflows from the contract daily + cumulative flows (TVL)

SELECT

date_time,

token_flow,

SUM(token_flow) OVER (order by date_time) as tvl_in_token,

token_flow_usd,

SUM(token_flow_usd) OVER (order by date_time) as tvl_in_usd

FROM

(

SELECT

data_creation_date as date_time,

SUM(CASE WHEN action='supply' THEN token_amount ELSE -token_amount END) as token_flow,

SUM(CASE WHEN action='supply' THEN amount_usd ELSE -amount_usd END) as token_flow_usd

FROM final_table

GROUP BY 1

)

ORDER BY 1 DESC

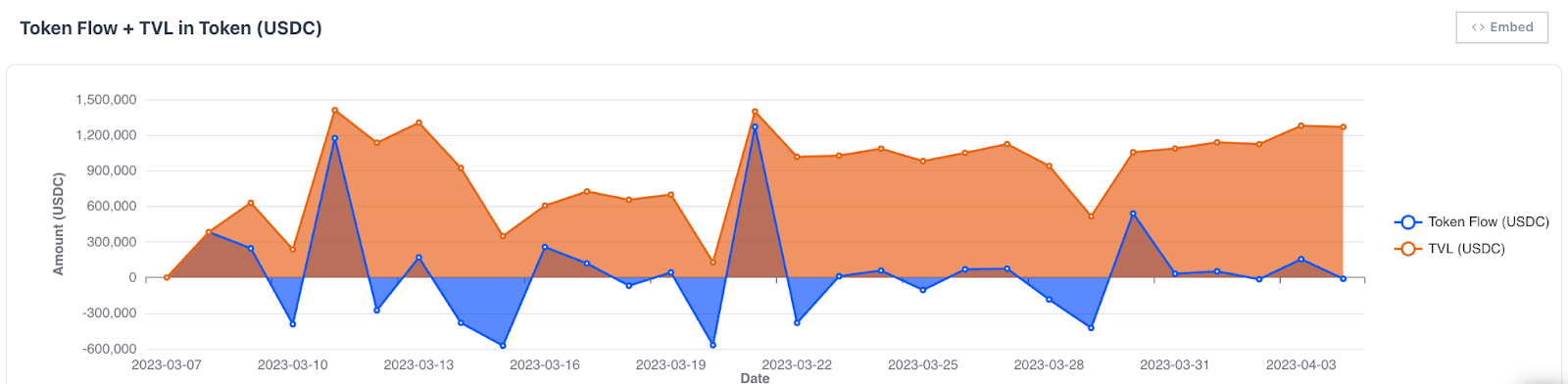

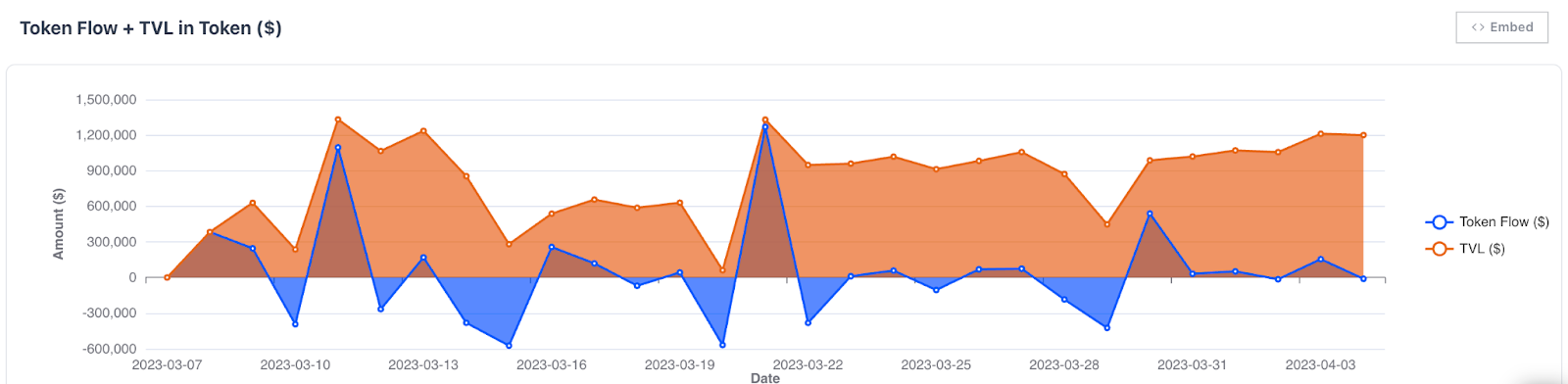

Step 3: Verifying the results

Step 4: Creating the API

- Select your desired refresh rate and the indexed fields.

- Enable Incremental Refresh

- Keep your API transformation code as it was. Within the Incremental SQL code, change:

DATE(data_creation_date) >= DATE(‘2023-03-07’) To:

DATE(data_creation_date) >= CURRENT_DATE - INTERVAL '3' DAYKeep in mind that this change has to be done for every time data_creation_date occurs within the query. To learn more about Incremental refresh, visit this document.

The API is now ready!

Updated 4 months ago