Data Export Quickstart

Learn how to export data from ZettaBlock for offline usage, including analysis, backup, or integration into external systems.

Currently, you can export data in the following ways:

- via a CSV file download

- via Analytics API (if CSV download is too manual)

- via GraphQL API (to surface into your own dApp)

- via an ETL pipeline

- via webhooks (coming soon)

- via Kafka Streams (coming soon)

- by connecting to the Data Lake via a Jupyter Notebook.

| Option | Limit | Summary | Common Use Case |
|---|---|---|---|
| CSV Download | 5 MB per query | Click the download button after executing the SQL query. | Download SQL query results as a CSV within the UI. |
| Analytics API | | | Get data from ad-hoc queries. |
| Transactional / GraphQL API | | | Serve data streams directly to dApps and apps that need low-latency (10 ms) support. |
| ETL pipeline* | No | | The default choice for the analytics use case. |

*To discuss the option of exporting data via ETL Pipeline, contact our team directly.

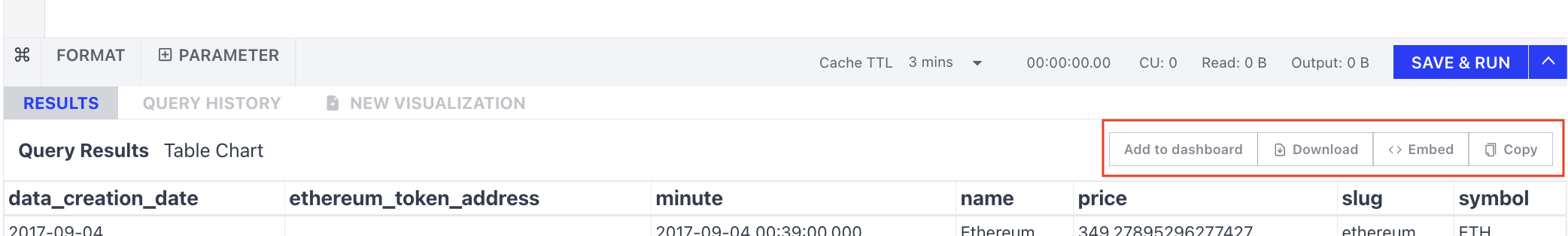

CSV Download

To download a CSV file of your query results, execute your SQL query, then click the download button in the query results panel. Downloads are limited to 5 MB per query.
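Once the file is on disk, it can be loaded for offline analysis with any CSV reader. The following is a minimal sketch using only the Python standard library. The file name `query_results.csv`, the helper names, and the UTF-8 encoding are assumptions for illustration, not part of ZettaBlock's documented behavior. The size check is based on the 5 MB per-query limit listed in the options table; this page does not state what happens to results beyond that limit, so the check only flags files worth a second look.

```python
import csv
from pathlib import Path

# 5 MB per-query limit from the options table.
# Treating "MB" as 1024 * 1024 bytes is an assumption.
EXPORT_LIMIT_BYTES = 5 * 1024 * 1024


def load_query_results(path):
    """Read a downloaded CSV export into a list of dicts keyed by column header."""
    # "utf-8-sig" reads plain UTF-8 and also tolerates a leading byte-order mark.
    with Path(path).open(newline="", encoding="utf-8-sig") as handle:
        return list(csv.DictReader(handle))


def is_near_export_limit(path, limit=EXPORT_LIMIT_BYTES, margin=0.95):
    """Return True when the file size is at least `margin` times the export limit."""
    return Path(path).stat().st_size >= limit * margin


if __name__ == "__main__":
    export = Path("query_results.csv")  # hypothetical file name
    if export.exists():
        rows = load_query_results(export)
        print(f"loaded {len(rows)} rows")
        if rows:
            print("columns:", list(rows[0].keys()))
        if is_near_export_limit(export):
            print("file is close to the 5 MB limit; the result set may be incomplete")
```

All values come back as strings, so numeric columns need an explicit conversion before analysis. If exports regularly approach the limit, one of the API or pipeline options from the table is likely a better fit than manual downloads.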
